To deliver fast analysis of our clients' documents, we need to retrieve a large number of images and their details as quickly as possible.

We analyzed and compared the raw performance of several open-source IIIF servers compatible with the IIIF Image API 2.0 specification on a few basic operations, under different scenarios and with different image file formats.

All our tests were run against containerized versions of the IIIF servers (using Docker), hosted one after the other on the same machine.

Our benchmark provides detailed information on how each server performs in each scenario. The project is open-source software, so its source code is available: anyone can test their own servers, and new server implementations can easily be added to the test suite. We also show how the latest IIIF server updates affect these performance figures.

This analysis and benchmarking tool should help project owners who require high throughput from IIIF servers make the best choice for their use case.

Benchmark specifications

IIIF Servers

In this study, we compared four common servers compatible with the IIIF Image API 2.0 specification:

- Cantaloupe

- IIPsrv

- Loris

- RAIS

Measurement environment

Each server was run in a Docker container to make the tests reproducible. At the time of writing, only RAIS ships with an up-to-date Docker image; for the other servers we had to build our own images for the benchmark, which are available in the project's registry.

We also tried to include the Go-IIIF and Hymir servers, but they proved too specialized: they required either specific image formats or a custom path resolver.

The machine hosting the servers was a Hetzner CPX41 VPS (8 vCPUs, 16 GB of RAM), and the benchmark was run through a VPN with a latency of 46.0 ms (σ = 0.53 ms).

It was important for us to measure results over a network, since our processing is distributed. Moreover, the servers use different data streaming strategies, which can affect results measured over a network.

All servers were tested in the same environment, configured to use as much of the available CPU as possible. Cantaloupe and RAIS use threading by default, whereas for Loris and IIPsrv we had to spawn multiple processes (10 in the benchmark implementation) to benefit from parallel processing.

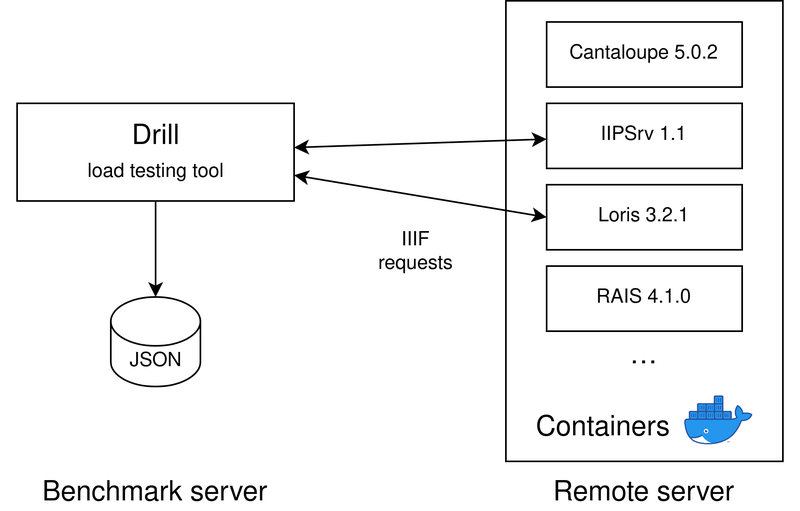

Benchmark tool

We used the Drill load-testing tool to run the benchmark. We found it easy to use and well suited to our use case, as it lets us vary the concurrency and the number of iterations of each test.

A Python script generates the scenarios from the tested operations, the image set and the chosen concurrency. It then runs the load test against each running server by invoking Drill. Once a load test is finished, the script parses the Drill report to generate, store and compare benchmark results in JSON format.
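The scenario generation step can be sketched in Python as follows. This is a minimal sketch under our own assumptions, not the project's actual code: the helpers `drill_plan` and `run_drill` are hypothetical, and the generated file only uses Drill's basic `base`, `concurrency`, `iterations` and `plan` keys.

```python
"""Sketch: render a Drill benchmark file and run it (hypothetical helpers)."""
import shutil
import subprocess
from pathlib import Path


def drill_plan(base_url: str, paths: list[str], concurrency: int, iterations: int) -> str:
    """Return a Drill benchmark file (YAML) that requests each path in turn."""
    lines = [
        f"base: '{base_url}'",
        f"concurrency: {concurrency}",
        f"iterations: {iterations}",
        "plan:",
    ]
    for i, path in enumerate(paths):
        # Single-quoted YAML scalars keep characters such as '!' in IIIF sizes literal.
        lines += [
            f"  - name: 'request {i}'",
            "    request:",
            f"      url: '{path}'",
        ]
    return "\n".join(lines) + "\n"


def run_drill(plan_file: Path) -> str:
    """Invoke the Drill CLI on a benchmark file and return its text output."""
    if shutil.which("drill") is None:
        raise RuntimeError("the drill executable is not on the PATH")
    result = subprocess.run(
        ["drill", "--benchmark", str(plan_file), "--stats"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

For example, `drill_plan("http://localhost:8182", paths, concurrency=10, iterations=100)` returns the text of a benchmark file that can be written to disk and passed to `run_drill`. Writing the YAML by hand keeps the sketch free of third-party dependencies; a YAML library would be more robust for arbitrary paths.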

Operations

We evaluated the computational efficiency of four image operations that we use intensively in our machine learning processes:

- Image information (retrieving image size or availability)

- Full image (used for segmentation into areas of interest)

- Resize (used for classification)

- Crop (used to process a section, such as a sub-page or a text area)
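Each operation maps to a request following the IIIF Image API 2.0 URI template `{prefix}/{identifier}/{region}/{size}/{rotation}/{quality}.{format}`. The sketch below builds those request paths; the prefix, the 512 px resize box and the 1000 px crop region are illustrative assumptions rather than the benchmark's actual parameters, and the output is always JPEG.

```python
"""Sketch: IIIF Image API 2.0 request paths for the four benchmarked operations."""
from urllib.parse import quote

# Hypothetical region/size values; the benchmark's real parameters may differ.
OPERATIONS = {
    "full": ("full", "full"),           # whole image at full size
    "resize": ("full", "!512,512"),     # fit inside 512x512, keeping aspect ratio
    "crop": ("0,0,1000,1000", "full"),  # x,y,w,h region in pixels
}


def iiif_path(prefix: str, identifier: str, operation: str) -> str:
    """Build {prefix}/{identifier}/{region}/{size}/{rotation}/{quality}.{format}."""
    ident = quote(identifier, safe="")  # identifiers must be URL-encoded, including '/'
    if operation == "info":
        return f"{prefix}/{ident}/info.json"
    region, size = OPERATIONS[operation]
    # Rotation 0, default quality, JPEG output whatever the source format.
    return f"{prefix}/{ident}/{region}/{size}/0/default.jpg"
```

For instance, `iiif_path("/iiif/2", "sample_01.jpg", "resize")` gives `/iiif/2/sample_01.jpg/full/!512,512/0/default.jpg`.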

All the servers were configured with caching disabled, so that we could run more iterations to refine the results without caching skewing the response times.
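More iterations give more samples per server and operation, which makes aggregate figures more stable. Below is a minimal sketch of such an aggregation, assuming raw per-request durations in milliseconds are available; the `summarise` and `to_json` helpers and the record's field names are hypothetical, and the real script reads its figures from Drill's report instead.

```python
"""Sketch: summarise per-request durations into JSON benchmark records."""
import json
import statistics


def summarise(server: str, operation: str, durations_ms: list[float]) -> dict:
    """Aggregate raw response times (ms) for one server/operation pair."""
    if not durations_ms:
        raise ValueError("no samples")
    ordered = sorted(durations_ms)
    # Nearest-rank 95th percentile: ceil(0.95 * n), computed with integers.
    rank = (95 * len(ordered) + 99) // 100
    return {
        "server": server,
        "operation": operation,
        "requests": len(ordered),
        "mean_ms": round(statistics.fmean(ordered), 2),
        "median_ms": statistics.median(ordered),
        "p95_ms": ordered[rank - 1],
    }


def to_json(records: list[dict]) -> str:
    """Serialise records so that runs can be stored and compared later."""
    return json.dumps(records, indent=2, sort_keys=True)
```

Reporting the median and a high percentile alongside the mean makes occasional slow requests visible instead of hiding them in an average.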

Formats comparison

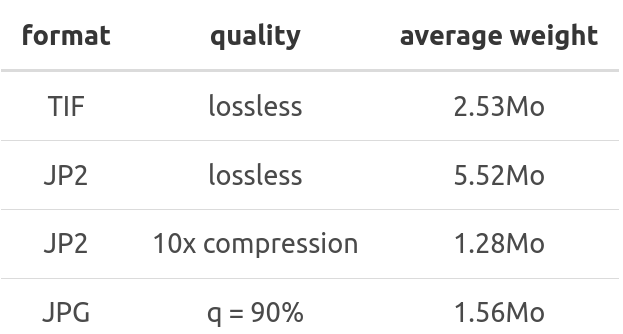

We used three formats for the stored source images, all supported by the IIIF Image API 2.0 specification: JPEG, TIFF and JPEG 2000.

We retrieved a set of 25 TIFF images from the public PPN321275802 manifest, then converted them to JPEG and JPEG 2000 using ImageMagick and libopenjp2-tools respectively:

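```shell
# JPEG at quality 90 (ImageMagick)
convert sample_01.tif -quality 90 sample_01.jpg
# Lossless JPEG 2000 (OpenJPEG defaults)
opj_compress -i sample_01.tif -o sample_01.jp2
# Lossy JPEG 2000 with a 10:1 compression ratio
opj_compress -r 10 -i sample_01.tif -o sample_01_10x.jp2
```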
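To convert the whole set of 25 images rather than a single sample, the three commands above can be generated for every TIFF in a directory. A small Python sketch, assuming ImageMagick's `convert` and OpenJPEG's `opj_compress` are on the `PATH`; the helper names are hypothetical, and with `dry_run=True` the commands are only returned, not executed.

```python
"""Sketch: generate the conversion commands above for every TIFF in a folder."""
import subprocess
from pathlib import Path


def conversion_commands(tif: Path) -> list[list[str]]:
    """Return the ImageMagick and OpenJPEG commands for one source TIFF."""
    stem = tif.with_suffix("")
    return [
        # JPEG at quality 90 (ImageMagick)
        ["convert", str(tif), "-quality", "90", f"{stem}.jpg"],
        # JPEG 2000 with OpenJPEG defaults (lossless)
        ["opj_compress", "-i", str(tif), "-o", f"{stem}.jp2"],
        # JPEG 2000 with a 10:1 compression ratio (lossy)
        ["opj_compress", "-r", "10", "-i", str(tif), "-o", f"{stem}_10x.jp2"],
    ]


def convert_all(src_dir: Path, dry_run: bool = True) -> list[list[str]]:
    """Build (and, unless dry_run, execute) the commands for all *.tif files."""
    commands = [
        cmd for tif in sorted(src_dir.glob("*.tif")) for cmd in conversion_commands(tif)
    ]
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)
    return commands
```

Passing arguments as lists rather than a single shell string avoids quoting problems with unusual file names.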

To decide which format is best for storing images, we compared each server's response time across the different formats. The servers were run with a basic configuration and the OpenJPEG codec, and the concurrency was set to 10 to observe how resilient they are.

JPEG seems to offer the best performance at similar resolutions, especially when retrieving full-resolution images. Crop operations are fast with JPEG 2000, but Loris seems to handle crops even better with JPEG.

JPEG compression artifacts are not really a problem in our case: they barely affect accuracy, while the format reduces transfer and processing time. JPEG is also widely adopted, which helps us avoid additional complexity or errors in our processes.

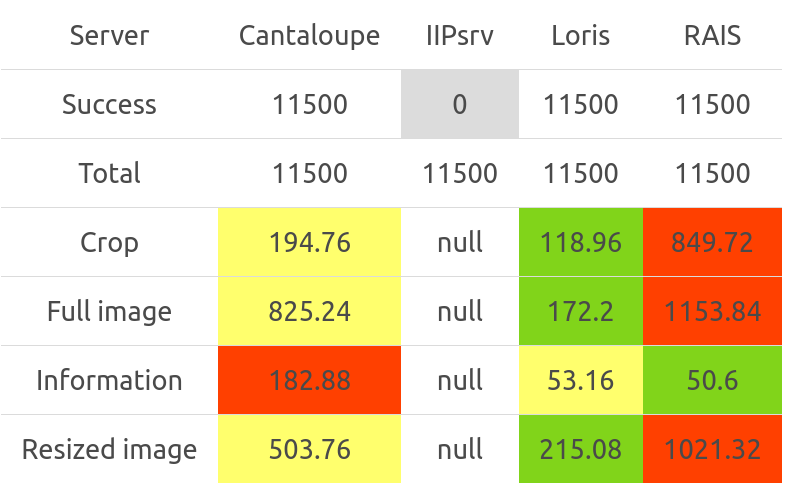

In our specific context, IIPsrv, which does not accept JPEG as an input format, is not the best choice for performance, although it seems to give the best results when decoding JPEG 2000. IIPsrv does not handle plain TIFF images either, as it requires tiled pyramidal TIFFs.
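IIPsrv expects tiled pyramidal TIFFs rather than plain TIFFs. One common way to produce them is the libvips `tiffsave` command; the sketch below, which is not part of the benchmark, builds that command from Python. It assumes libvips is installed, and the 256 px tiles with JPEG tile compression are typical values, not settings we tested.

```python
"""Sketch: libvips command converting a plain TIFF into a tiled pyramidal TIFF."""
import subprocess
from pathlib import Path


def pyramid_command(src: Path, dst: Path, tile: int = 256, compression: str = "jpeg") -> list[str]:
    """Build a `vips tiffsave` call; 'jpeg' tiles are lossy, 'deflate' is lossless."""
    return [
        "vips", "tiffsave", str(src), str(dst),
        "--tile", "--pyramid",
        "--compression", compression,
        "--tile-width", str(tile),
        "--tile-height", str(tile),
    ]


def make_pyramid(src: Path, dst: Path) -> None:
    """Run the conversion; requires the vips executable on the PATH."""
    subprocess.run(pyramid_command(src, dst), check=True)
```

Choosing `compression="deflate"` keeps the pyramid lossless, at the cost of larger files than JPEG-compressed tiles.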

Note that the output format used to compare response times was always JPEG, regardless of the input format.

Performances results

Concurrency

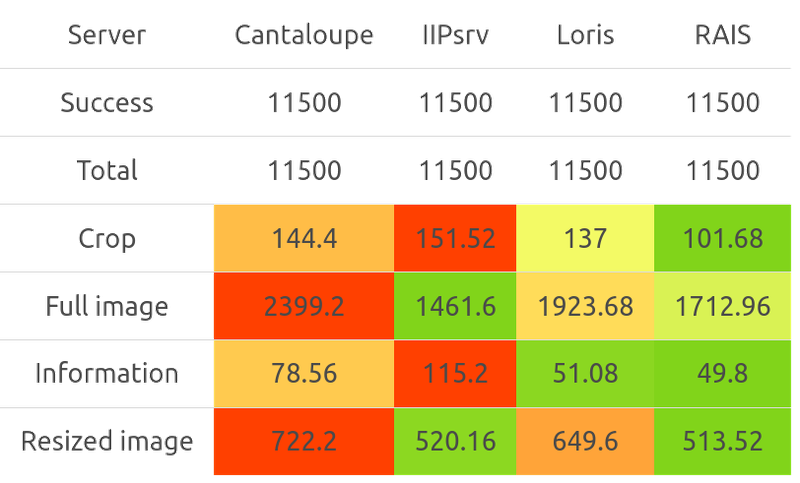

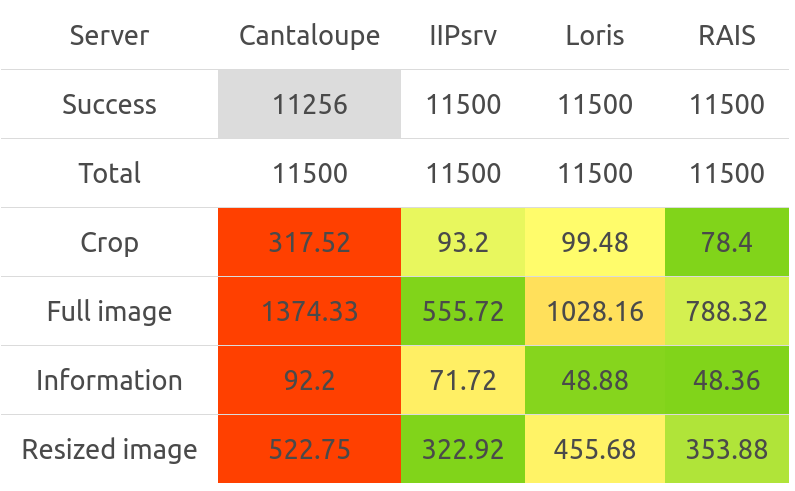

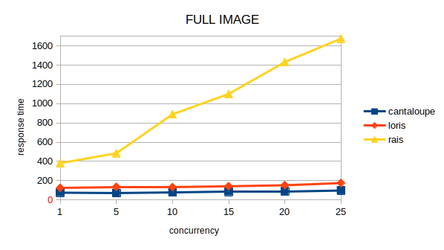

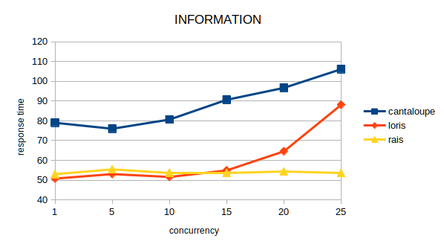

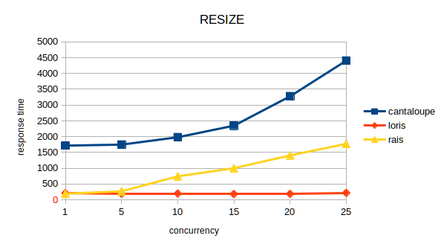

As JPEG seems to be the best format for our need, we compared compatible servers response with different levels of concurrency:

RAIS performance drops quickly on most operations. This is caused by a software limitation: decoding JPEGs with ImageMagick causes disk space errors under concurrency.

Loris seems to handle concurrency relatively well compared to Cantaloupe. Cantaloupe is extremely fast at serving the full image because it applies no transformation, which is rather smart but can make its output less consistent with that of the other operations.
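A simple queueing model helps read these curves; it is our own illustration, not part of the benchmark. In a closed-loop load test, each of the N concurrent clients sends its next request as soon as the previous one returns. If the server has W parallel workers and each request needs S ms of processing, throughput cannot exceed W/S, and Little's law (N = X·R) then gives a lower bound of max(S, N·S/W) on the mean response time R. The sketch assumes CPU is the only bottleneck, and the values used below are hypothetical.

```python
"""Sketch: lower bound on mean response time in a closed-loop load test."""


def latency_lower_bound_ms(concurrency: int, workers: int, service_ms: float) -> float:
    """Mean response time can be no lower than this (hypothetical model).

    `concurrency` clients each send a new request as soon as the previous one
    returns. `workers` requests can be processed in parallel, each taking
    `service_ms`, so throughput X is at most workers / service_ms. Little's law
    (N = X * R) then gives R >= concurrency * service_ms / workers, and R can
    never be below the service time itself.
    """
    return max(service_ms, concurrency * service_ms / workers)
```

With 8 workers and 100 ms per request, the bound stays at 100 ms up to 8 concurrent clients, then grows linearly: 125 ms at a concurrency of 10 and 625 ms at 50. Real servers usually do worse once memory, disk or I/O contention appears.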

Decoding libraries

We compared the different decoding libraries available on the Cantaloupe server, which is easy to configure and offers many image decoding options.

JPEG

We had to use release 4.1.9 of Cantaloupe to test ImageMagick and GraphicsMagick, as support for them was removed in later releases.

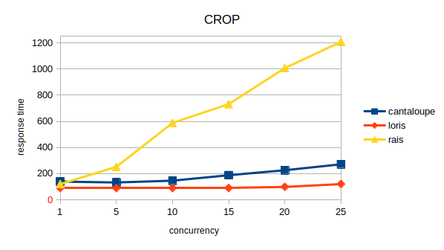

These results show that libjpeg-turbo clearly improves JPEG decoding performance compared to the default Java2D library:

- Crop: 69.1% faster

- Full image: 29.4% faster

- Information: 58.1% faster

- Resized image: 871.2% faster
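These percentages are given without their formula. One definition consistent with them, and with the "10 times faster" estimate in the conclusion, is the ratio of response times minus one: under it, 871.2% faster means resize requests take about 9.7 times less time. The sketch below encodes that assumed definition; the "slower" figures elsewhere in this article may have been computed differently.

```python
"""Sketch: one possible definition of the 'X% faster' figures (an assumption)."""


def percent_faster(reference_ms: float, candidate_ms: float) -> float:
    """Speed-up of `candidate` over `reference` in percent.

    Assumed definition: (reference / candidate - 1) * 100. 100% faster means
    twice as fast; a negative result means the candidate is slower.
    """
    return (reference_ms / candidate_ms - 1.0) * 100.0


def speedup_factor(percent: float) -> float:
    """Convert an 'X% faster' figure back into a response-time ratio."""
    return 1.0 + percent / 100.0
```

For example, `percent_faster(200.0, 100.0)` is `100.0` (twice as fast), and `speedup_factor(871.2)` is about 9.7.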

Loris, which is based on Pillow, also performed rather well with JPEGs.

Comparison of Loris and Cantaloupe with libjpeg-turbo

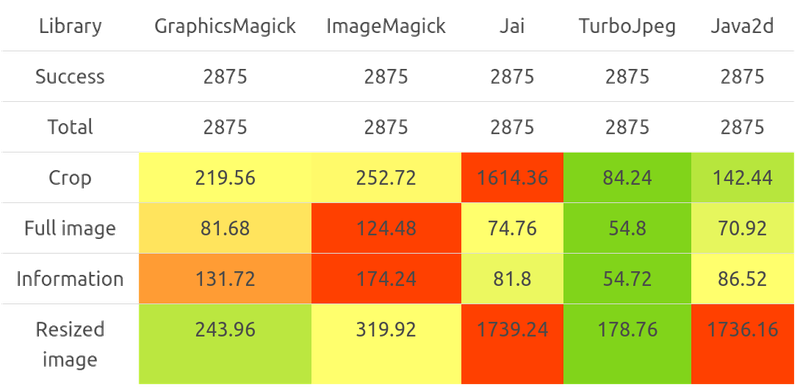

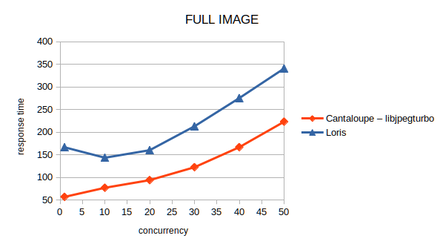

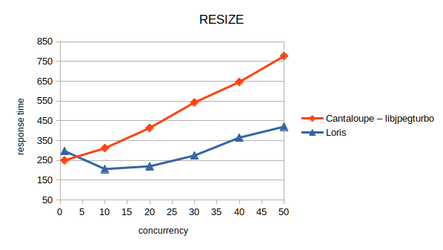

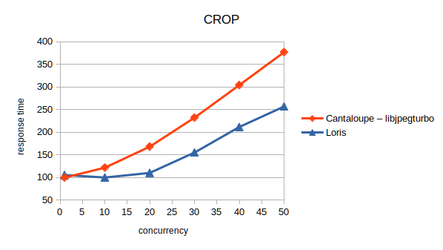

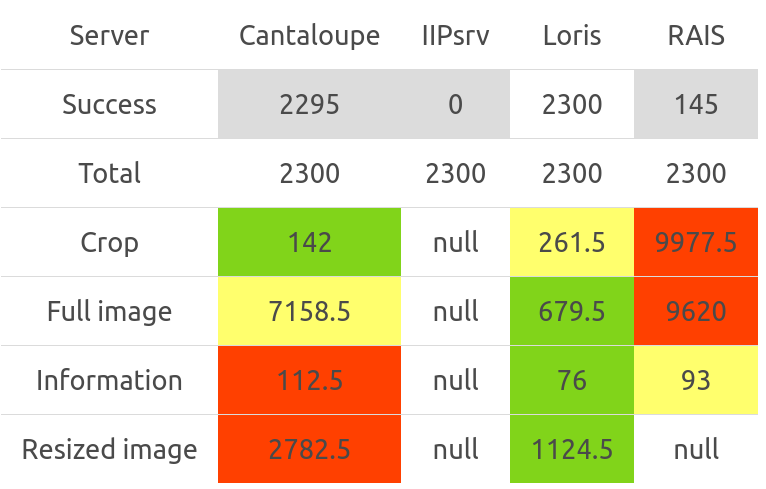

We compared performances between the two most promising servers for JPEGs, with different levels of concurrency:

Those results shows interesting conclusions:

- Cantaloupe's strategy of serving source images directly makes it incredibly fast, especially at low concurrency.

- Both servers have rather similar speeds for the other operations at low concurrency.

- At high concurrency, Loris is significantly faster than Cantaloupe on crop and resize operations (31.9% and 45.9% faster respectively, with a concurrency of 50).

Other tests

Because the benchmark is generic, we could also measure how certain parameters influence performance.

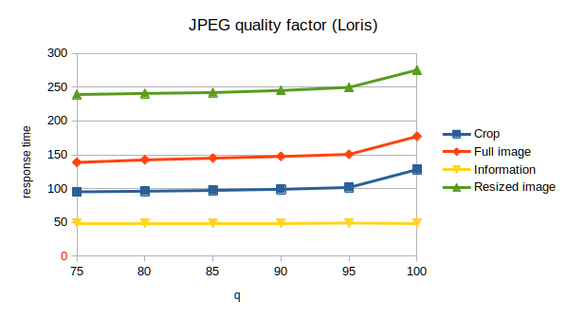

JPEG quality factor

The quality factor has little impact on response time between 75 and 95. However, lowering the quality from 100 to 95 can bring a significant improvement:

- Crop: 26.2% faster

- Full image: 17.7% faster

- Information: 0.41% slower

- Resized image: 10.3% faster

JPEG 2000 decoding libraries

We compared the performance of the Kakadu and OpenJPEG implementations on the Cantaloupe server.

The Kakadu library is much faster for most operations, especially for full and resized images:

- Crop: 16.1% faster

- Full image: 174.7% faster

- Information: 16.5% slower

- Resized image: 236.2% faster

Large source files

Many IIIF projects use high-resolution images. We ran the benchmark with a set of two images approaching 10,000 px to see whether this makes a significant difference. File sizes by format:

- JPEG (quality 90): 11.57 MB

- TIFF (lossless): 137.00 MB

- JPEG 2000 (lossless): 57.79 MB

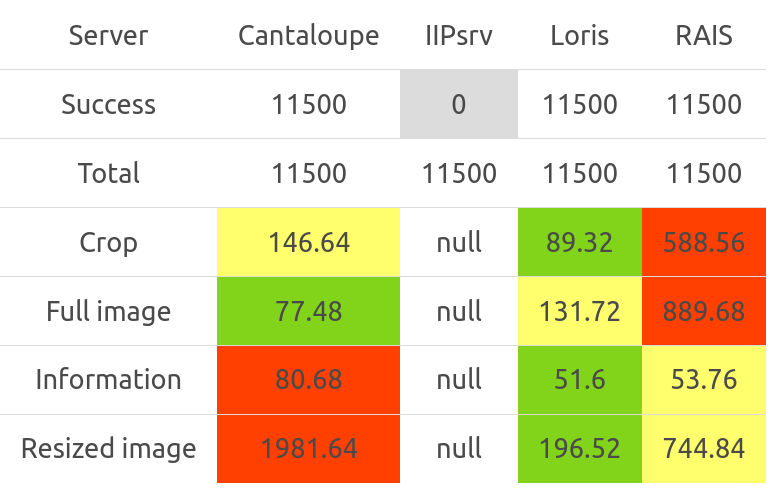

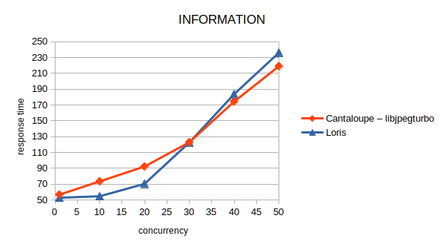

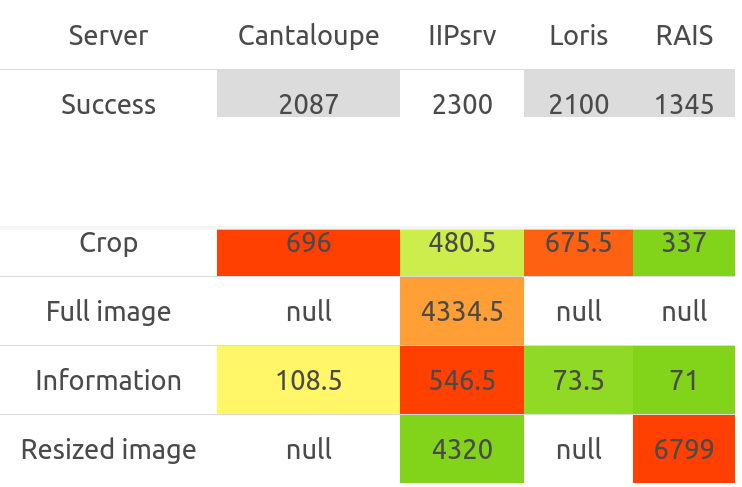

We tested resilience by serving large images on each servers, with a concurrency of 10:

Although TIFF takes more disk space, it seems to be the best format on average for large images. Loris gives the best results combined with TIFF, and it is the only server that managed to handle both TIFF and JPEG for large files. IIPsrv is the only server that managed to complete all requests with JPEG 2000.

In these three cases, performance was close to the results at a concurrency of 1. This can be explained by the fact that these implementations spawn one process per core, and the server used for the benchmark has 8 vCPUs.

Conclusion

IIIF servers are usually optimized for fast navigation within high-quality images. In a context of unpredictable, high-throughput load, our results show that choosing the right format and server for the use case can have a major impact on performance. Response times generally explode at high concurrency and may cause errors during machine learning processes.

We were already running a Cantaloupe server, so a first step would be to update its configuration to use libjpeg-turbo. This change alone could have a huge impact, making certain jobs up to 10 times faster. Depending on the need, other combinations of servers and formats are possible. In our case, JPEG, a lightweight, fast and widely adopted format, seemed to be the best option for storing images.

If we need to optimize the speed of crop and resize operations further, we may consider switching to Loris: it seems to handle concurrency well on those operations, and, being written in Python, it is closer to our tech stack.

The benchmark we created to generate this data is free and open-source software. Any reuse of or contribution to the project is welcome, and could help to:

- Update a server to a newer release

- Add and compare results with a new server

- Compare a different images set or format

- Test a specific IIIF feature